Getting the mutant home server gaming in a VM

This post is the second part of a series I am working on. See the first part here: Home Lab Upgrades: Why This Mutant Motherboard/CPU Combo Could Be the Perfect Solution.

The biggest, most exciting use case for my new home server was a virtualised gaming environment. I’ve always wanted a living room gaming PC, but didn’t want to have a PC just for that purpose. I’ve had a home server of some sort for the last couple of years, usually running some Docker containers, and I always wondered whether one day I could run Windows in a VM with a graphics card. I can confirm that, yes, it is possible, and it works absolutely great. Before I discuss how to set this up, let’s discuss what it’s like.

What’s it like?

In short, it’s great! In my setup, I’ve put the server in a cupboard, and have run a rather long fiber optic HDMI cable from the cupboard out to a television in my living room. I control it using a small media centre style keyboard and mouse, as well as an old Xbox 360 controller for gaming. I’ve configured the Windows VM to automatically start Steam Big Picture, and from there I just select a game with the Xbox controller, and the game launches and runs with near-native performance. The media centre keyboard/mouse is mainly there to click on dialog boxes and such. So far, I’ve successfully gotten the following games to run with zero extra effort:

- Red Dead Redemption 2 + a bunch of mods

- Cyberpunk 2077

- Portal 2

- Jackbox Games (I mean, these run on a potato, but it’s still nice!)

- Far Cry 6

I’ve not really used it for any multiplayer games - and one form of trouble I expect in the future is bullshit DRM causing problems. Games that use anti-cheat I am guessing are not going to be too happy running in a virtualised environment, so I wouldn’t necessarily recommend this setup for that - but if you mostly play single player campaigns - or you’re willing to engage in a bit of… ✨Creativity✨ in how you get your games running - you should have few to no problems.

The benefits of this setup are great. There is absolutely no console to have to hide in my living room and no screaming fan to enjoy the sound of. It’s like Google Stadia, except without the latency, without the quality penalty, and most importantly, it still exists.

One slight pain point - This is a HUNGRY VM. You’re going to want plenty of RAM to throw at it, and you’ll want more than if you just had a Windows install on the machine natively, since you’ll need some for Proxmox, and some for all the other stuff you’ll do with Proxmox. I went with 32GB, and give 16GB to Windows. Even this feels cramped some days.

Another slight pain point - You need some way to start and stop the VM! I came up with what I thought was a rather nice solution for this. My wife and I both use Telegram, and Telegram bots are an absolute joy to write. I wrote a simple Go service that runs directly on the Proxmox host (managed by systemd) and spins up a web server. When it receives a request with a ✨Super Secure Secret Key✨, it executes qm start <vmid>, which starts the virtual machine. I then wrote a Telegram bot which only speaks to my wife and me (using Telegram as the authentication mechanism). If it receives a command to start the VM, it starts the VM. This Telegram bot of course lives on my Proxmox node inside a container, and is therefore able to make a web request to the Go service running on the Proxmox host. You could write these as one service for sure, but my Telegram bot does a lot more than just this for me, hence the separation. I haven’t shared the code for these yet because it’s terrible, and it’s really nothing special.
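To make the shape of that host-side service a little more concrete, here is a minimal sketch. It is written in Python rather than Go, and it is not the unpublished service described above. The secret key, the VM ID, the `/start` path, the `X-Secret-Key` header and the port are all made-up placeholders.

```python
import hmac
import subprocess
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import List, Optional

# Hypothetical placeholders: none of these values come from the post.
SECRET_KEY = "change-me"
WINDOWS_VMID = 100


def is_authorised(provided: Optional[str], expected: str = SECRET_KEY) -> bool:
    """Compare the supplied key in constant time; a missing key never matches."""
    if provided is None:
        return False
    return hmac.compare_digest(provided.encode(), expected.encode())


def qm_start_command(vmid: int) -> List[str]:
    """Build the argument list for `qm start <vmid>`."""
    return ["qm", "start", str(vmid)]


class StartHandler(BaseHTTPRequestHandler):
    """Answers POST /start by starting the Windows VM if the key matches."""

    def do_POST(self) -> None:
        if self.path != "/start":
            self.send_error(404)
            return
        # The header name is an assumption made for this sketch.
        if not is_authorised(self.headers.get("X-Secret-Key")):
            self.send_error(403)
            return
        result = subprocess.run(
            qm_start_command(WINDOWS_VMID), capture_output=True, text=True
        )
        self.send_response(200 if result.returncode == 0 else 500)
        self.end_headers()
        self.wfile.write((result.stdout + result.stderr).encode())


def serve(port: int = 8080) -> None:
    """Run the web server until interrupted (call this from a systemd unit)."""
    HTTPServer(("0.0.0.0", port), StartHandler).serve_forever()
```

Calling `serve()` on the Proxmox host would let the bot's container trigger the VM with a single authenticated POST request. In practice you would probably bind to a private address rather than `0.0.0.0`.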

This setup means that I can sit on my couch, fire up a Telegram chat, press a button, and about a minute later I am sitting in front of the Steam Big Picture UI, ready to play some games. I then use the Xbox controller to navigate the Big Picture UI, and I game. It’s probably as close to a console experience as one could have.

Final pain point - If you shut down the Windows VM, the GPU gets left in a really weird high power state, and produces a lot of heat considering it is literally doing nothing. It actually seems to run cooler while the VM is running. I did not want to rely on leaving a Windows VM running all the time, so I created the world’s saddest Linux VM. It is a really stripped down Ubuntu VM, configured exactly the same as my Windows VM, except it is given 1GB of RAM and 1 core, and has absolutely no network access. It runs no services, it hosts no websites, it plays no games, and it can’t even be accessed in any way. Its only task is to hold the GPU. The same Go server I described above constantly polls the status of the Windows VM with qm status <vmid>, and if it isn’t running, it starts the GPU holder VM. That then spins up, initialises the GPU, and does absolutely nothing else. It sits there, lonely, and sad. When I request that the Windows VM start, the GPU holder VM unceremoniously gets stopped with qm stop <vmid>, and the Windows VM gets started. A neat solution that keeps the power consumption of the GPU as low as possible. Better solutions to this are definitely possible, but I like my hacky solution.
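The holder logic can be sketched roughly as follows. This is again a Python illustration, not the Go code from the host. The two VM IDs and the polling interval are made up, and the parsing assumes `qm status <vmid>` prints a line of the form `status: running` or `status: stopped`.

```python
import subprocess
import time
from typing import List

# Hypothetical VM IDs chosen for this sketch.
WINDOWS_VMID = 100
HOLDER_VMID = 101


def parse_qm_status(output: str) -> str:
    """Extract the state from `qm status` output such as 'status: running'."""
    for line in output.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "status":
            return value.strip()
    return "unknown"


def should_start_holder(windows_status: str, holder_status: str) -> bool:
    """Start the holder only when both VMs are known to be stopped."""
    return windows_status == "stopped" and holder_status == "stopped"


def start_windows_commands() -> List[List[str]]:
    """Commands to hand the GPU over: stop the holder, then start Windows."""
    return [
        ["qm", "stop", str(HOLDER_VMID)],
        ["qm", "start", str(WINDOWS_VMID)],
    ]


def qm_status(vmid: int) -> str:
    """Ask Proxmox for the current state of one VM."""
    result = subprocess.run(
        ["qm", "status", str(vmid)], capture_output=True, text=True
    )
    return parse_qm_status(result.stdout)


def poll_forever(interval_seconds: float = 30.0) -> None:
    """Keep the GPU occupied whenever the Windows VM is not running."""
    while True:
        if should_start_holder(qm_status(WINDOWS_VMID), qm_status(HOLDER_VMID)):
            subprocess.run(["qm", "start", str(HOLDER_VMID)])
        time.sleep(interval_seconds)
```

Treating an unreadable status as "do nothing" is a deliberate choice here: if `qm` fails for some reason, the loop will not try to start a second VM that wants the same GPU.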

How to set this up:

I had no idea before I set this up whether it would work or not (see my previous post: Home Lab Upgrades: Why This Mutant Motherboard/CPU Combo Could Be the Perfect Solution). There’s no technical reason why GPU passthrough shouldn’t work. It’s a relatively well established technology these days. It can be finicky, but on the AMD GPU side especially, things have worked pretty well for a while, bar some strange hardware bugs affecting earlier AMD graphics cards. However, as I discussed in my previous post, the platform I picked is anything but typical. It is a CPU that should never have seen a consumer, strapped to a motherboard that would prefer to not exist, with a hacked BIOS holding it all together, sold on AliExpress at an extremely discounted price. The odds definitely needed to be in my favour for everything to hold together well enough for this to work. I already had an AMD Radeon RX 6700 XT from my main desktop, so I decided to test with that.

BIOS Settings

After installing the GPU, I entered the BIOS of the Erying board, in order to ensure the settings were set up as needed. These are the settings that you’ll need to tweak, should you decide to do this. Do this before you attempt to set anything up.

- Advanced > CPU Configuration > Enable Intel (VMX) Virtualisation Technology - This is what allows Proxmox to do virtualisation on your CPU

- Advanced > Graphics Configuration > Enable VT-D (This is essentially IOMMU - tech that lets you virtualise hardware like your GPU)

- Advanced > Graphics Configuration > Disable Internal Graphics (Just to keep things simple, you can re-enable it later!)

- Advanced > Graphics Configuration > Enable Above 4GB MMIO BIOS assignment

- Advanced > PCI Subsystem Settings > Enable Above 4G Decoding

- Advanced > PCI Subsystem Settings > Disable Re-Size BAR Support (This caused crashing for me)

Proxmox initial setup

When you next boot into Proxmox, it will display a shell on your GPU. We don’t want this. We want Proxmox to ignore the GPU completely, so that a virtual machine can initialise it. To this end, SSH into your Proxmox node for the next steps. I should note that for these steps I am heavily summarizing the excellent guides you can find here: Proxmox PCI Passthrough, and here: cjalas: The Ultimate Beginner’s Guide to GPU Passthrough - taking only the steps I needed.

Firstly, you’ll want to modify /etc/default/grub, specifically the line starting GRUB_CMDLINE_LINUX_DEFAULT. Add it if it doesn’t exist.

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt pcie_acs_override=downstream,multifunction initcall_blacklist=sysfb_init video=simplefb:off video=vesafb:off video=efifb:off video=vesa:off disable_vga=1 vfio_iommu_type1.allow_unsafe_interrupts=1 kvm.ignore_msrs=1 modprobe.blacklist=radeon,nouveau,nvidia,nvidiafb,nvidia-gpu,snd_hda_intel,snd_hda_codec_hdmi,i915"

Then, run update-grub.
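The new command line only takes effect after a reboot. Once you have rebooted later in this guide, a quick sanity check is to compare /proc/cmdline against the flags you expected. The sketch below checks only the two IOMMU flags; which flags count as "required" is a choice made for this example, not something Proxmox mandates.

```python
from typing import Iterable, List

# Only the two IOMMU switches from the GRUB line are checked in this sketch.
REQUIRED_PARAMS = ("intel_iommu=on", "iommu=pt")


def missing_params(cmdline: str, required: Iterable[str] = REQUIRED_PARAMS) -> List[str]:
    """Return the required parameters that are absent from a kernel command line."""
    present = set(cmdline.split())
    return [param for param in required if param not in present]


def read_cmdline(path: str = "/proc/cmdline") -> str:
    """Read the command line the running kernel was booted with."""
    with open(path, encoding="utf-8") as handle:
        return handle.read()
```

On the Proxmox host, `missing_params(read_cmdline())` returning an empty list suggests the GRUB change was picked up.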

You’ll then want to add the following lines to your /etc/modules file:

# Modules required for PCI passthrough

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

Next, you need to blacklist your GPU. This means that Proxmox will no longer attempt to initialise your GPU, and it will effectively become useless until we pass it to a VM.

echo "blacklist amdgpu" >> /etc/modprobe.d/blacklist.conf

echo "blacklist radeon" >> /etc/modprobe.d/blacklist.conf

Next, you want to add your GPU to VFIO. Run lspci -v and hunt for the output that relates to your GPU. Here’s what it looks like for mine:

03:00.0 VGA compatible controller: Advanced Micro Devices, Inc. [AMD/ATI] Navi 22 [Radeon RX 6700/6700 XT/6750 XT / 6800M/6850M XT] (rev c5) (prog-if 00 [VGA controller])

Subsystem: Sapphire Technology Limited Sapphire Radeon RX 6700

Flags: bus master, fast devsel, latency 0, IRQ 139, IOMMU group 17

Memory at 4000000000 (64-bit, prefetchable) [size=256M]

Memory at 4010000000 (64-bit, prefetchable) [size=2M]

I/O ports at 4000 [size=256]

Memory at 50000000 (32-bit, non-prefetchable) [size=1M]

Expansion ROM at 50100000 [disabled] [size=128K]

Capabilities: [48] Vendor Specific Information: Len=08 <?>

Capabilities: [50] Power Management version 3

Capabilities: [64] Express Legacy Endpoint, MSI 00

Capabilities: [a0] MSI: Enable+ Count=1/1 Maskable- 64bit+

Capabilities: [100] Vendor Specific Information: ID=0001 Rev=1 Len=010 <?>

Capabilities: [150] Advanced Error Reporting

Capabilities: [200] Physical Resizable BAR

Capabilities: [240] Power Budgeting <?>

Capabilities: [270] Secondary PCI Express

Capabilities: [2a0] Access Control Services

Capabilities: [2d0] Process Address Space ID (PASID)

Capabilities: [320] Latency Tolerance Reporting

Capabilities: [410] Physical Layer 16.0 GT/s <?>

Capabilities: [440] Lane Margining at the Receiver <?>

Kernel driver in use: vfio-pci

Kernel modules: amdgpu

Note the numbers at the beginning, 03:00.0 in my case. Next, run lspci -n -s 03:00 to determine the vendor and device IDs for the GPU. It may output two lines: one will be the GPU, the second will be the audio function of your GPU. Create the file /etc/modprobe.d/vfio.conf with these IDs, like so:

root@kevs-server:/etc/modprobe.d# cat vfio.conf

options vfio-pci ids=1002:73df,1002:ab28 disable_vga=1

Then run update-initramfs -u.
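If you would rather not copy the IDs by hand, the vfio.conf line can be derived from the lspci -n output. The sketch below assumes the usual `slot class: vendor:device (rev xx)` layout of each line. The helper names are made up for this example.

```python
import re
from typing import List

# Matches lines such as "03:00.0 0300: 1002:73df (rev c5)".
ID_PATTERN = re.compile(
    r"^\S+\s+[0-9a-f]{4}:\s+([0-9a-f]{4}:[0-9a-f]{4})", re.IGNORECASE
)


def parse_ids(lspci_n_output: str) -> List[str]:
    """Collect the vendor:device pairs from `lspci -n -s <slot>` output."""
    ids: List[str] = []
    for line in lspci_n_output.splitlines():
        match = ID_PATTERN.match(line.strip())
        if match:
            device_id = match.group(1).lower()
            if device_id not in ids:
                ids.append(device_id)
    return ids


def vfio_conf_line(ids: List[str]) -> str:
    """Format the options line that goes into /etc/modprobe.d/vfio.conf."""
    return "options vfio-pci ids=" + ",".join(ids) + " disable_vga=1"
```

Feeding it the two lines printed for the card in this post reproduces the options line shown above.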

Finally, fully reboot your system, and check the monitor you’ve got attached to your system. As soon as the BIOS finishes loading, you should be left with a screen that says the following:

Loading Linux <whatever version of the kernel> ...

Loading initial ramdisk ...

If so, congratulations, you’re ready to start configuring your VM!
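A second check, beyond the frozen boot screen, is to confirm that the card is bound to vfio-pci, as in the `Kernel driver in use: vfio-pci` line of the lspci output earlier. Here is a small sketch that pulls that field out of lspci text. The function names are made up for this example.

```python
import re
from typing import Optional

# lspci -v and lspci -k both print a "Kernel driver in use:" line per device.
DRIVER_PATTERN = re.compile(r"^\s*Kernel driver in use:\s*(\S+)", re.MULTILINE)


def driver_in_use(lspci_output: str) -> Optional[str]:
    """Return the bound kernel driver, or None if no driver line is present."""
    match = DRIVER_PATTERN.search(lspci_output)
    return match.group(1) if match else None


def is_bound_to_vfio(lspci_output: str) -> bool:
    """True when the device is claimed by vfio-pci rather than a GPU driver."""
    return driver_in_use(lspci_output) == "vfio-pci"
```

You could feed it the output of `lspci -k -s 03:00.0` (substituting your own slot). If it reports amdgpu instead, the blacklist or vfio.conf step most likely did not take effect.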

Proxmox VM setup

It’s time to create your VM! Go ahead and hit the Create VM button in Proxmox. Don’t start the VM until the guide suggests you should. Start off by giving it a nice name.

- OS: Select your OS. I had a Windows 11 ISO, so I selected that. Ensure you also set Guest OS Type to Microsoft Windows.

- System: Machine should be set to q35, and BIOS to OVMF (UEFI). Tick the Add EFI Disk option and select somewhere to store the disk. Tick the Add TPM box and select the disk to store your TPM on.

- Disks: This is up to you. I went with SATA, but you probably want VirtIO SCSI for better performance. If you’re running on an SSD, tick the SSD emulation box, and if your SSD supports Discard (it probably does!), tick the Discard box.

- CPU: Again, up to you, but I recommend giving it virtually all the cores in your system. For Type you probably want to select Host, although any will work.

- Memory: Up to you, but I wouldn’t recommend Ballooning unless you’re really tight on RAM, given this is a VM where you’re going to want as much performance as possible. Ballooning lets the host adjust how much RAM the VM holds while it runs, but it requires further setup. I didn’t enable this.

- Network: Up to you

Next, it’s time to pass your graphics card through to the VM. To do this, select your newly created VM from the left in Proxmox, and select the Hardware tab. Click the Add button, and select PCI Device. Select Raw Device, and select your GPU. Tick All Functions, Primary GPU, PCI-Express, and ROM-Bar.
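If you prefer the shell, the same passthrough entry can be written with qm set. The sketch below builds that command. It assumes the checkboxes map onto the hostpci options in the usual way: All Functions drops the function suffix from the address, Primary GPU is `x-vga=1`, PCI-Express is `pcie=1`, and ROM-Bar is on unless `rombar=0` is given. The VM ID is a placeholder, and it is worth comparing the result against what the GUI writes for your own VM.

```python
from typing import List


def hostpci_value(
    address: str,
    all_functions: bool = True,
    primary_gpu: bool = True,
    pcie: bool = True,
    rom_bar: bool = True,
) -> str:
    """Build a hostpci option string from the GUI-style choices."""
    # "All Functions" is expressed by omitting the ".0" function suffix.
    device = address.rsplit(".", 1)[0] if all_functions else address
    options = [device]
    if pcie:
        options.append("pcie=1")
    if primary_gpu:
        options.append("x-vga=1")
    if not rom_bar:
        # ROM-Bar is enabled by default, so only the "off" case is spelled out.
        options.append("rombar=0")
    return ",".join(options)


def qm_set_command(vmid: int, address: str) -> List[str]:
    """Argument list for attaching the GPU as hostpci0 on the given VM."""
    return ["qm", "set", str(vmid), "-hostpci0", hostpci_value(address)]
```

For the card in this post, `qm_set_command(100, "0000:03:00.0")` yields a command equivalent to `qm set 100 -hostpci0 0000:03:00,pcie=1,x-vga=1`, where 100 stands in for your VM's ID.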

You are also free at this point to pass through some USB ports to your soon-to-be-running Windows VM. I, for example, pass through a wireless keyboard/mouse dongle and an Xbox controller dongle. Plug these into your machine if you haven’t already, then select Add, and USB Device. I then suggest ticking Use USB Port, and selecting the ports they’re plugged in to. This means you can in future plug other stuff into these ports and it’ll just work. You can add more later, so no worries here.

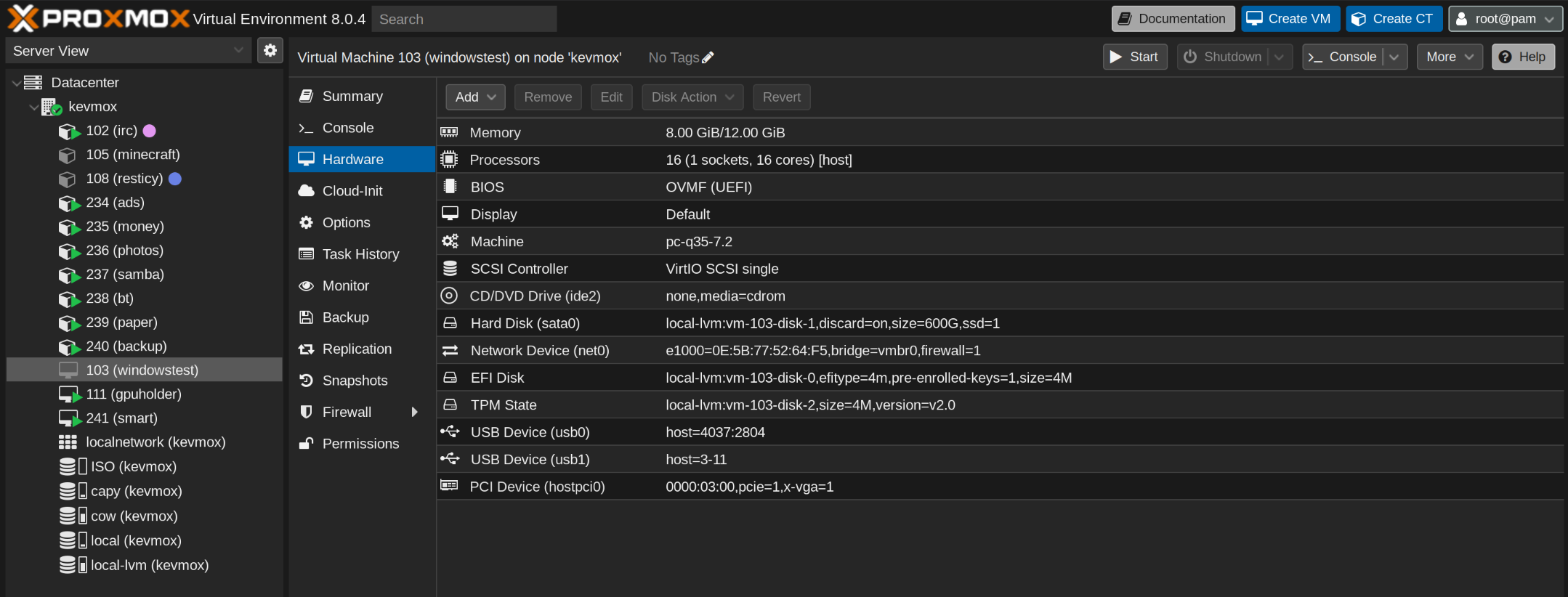

Once you’re done, you should end up with a setup that looks something like this:

And finally, it is time to start the VM, and install Windows. You’ll need to do it from a monitor you attach to the GPU, as well as with a keyboard/mouse you passed through to the VM earlier. I’m sure you don’t need help there!

The experience of others has not been as smooth as mine, so be warned should you follow my path here. People with literally identical setups to mine have failed - seemingly because some of the engineering sample CPUs are capable of it, whereas others are not. Real PC hardware will probably work more reliably.

Let me know what you thought! Hit me up on Mastodon at @kn100@fosstodon.org.